Can I Upload Data to Snowflake Without Command Line?

This series takes you from zero to hero with the latest and greatest cloud data warehousing platform, Snowflake.

Our previous post in this series explained how to import data via the Snowflake user interface. You can also stage your data and load it into tables with code via SnowSQL, which is what this blog post will demonstrate.

Setting the Stage for SnowSQL

There are many ways to import data into Snowflake, and utilising code allows users to automate the process. To import data into a Snowflake stage using SnowSQL, the following requirements must be met:

- SnowSQL must be installed on the user's machine

- The data must be in a suitable file format

- A warehouse in Snowflake must exist to perform the import

- A destination database must exist in Snowflake

- A destination stage must exist within the Snowflake database

To load data from a Snowflake stage into a table, the following additional requirements must be met:

- A destination table must exist within the Snowflake database

- A suitable file format must be within the Snowflake database

Installing SnowSQL

SnowSQL is the command line interface tool that allows users to execute Snowflake commands from within a standard terminal. This means users do not need to be within the Snowflake UI to prepare and execute queries. It is also noteworthy that certain commands cannot be executed from within the Snowflake Worksheets environment but can be executed via SnowSQL, the most noteworthy being the command to import data into a Snowflake stage.

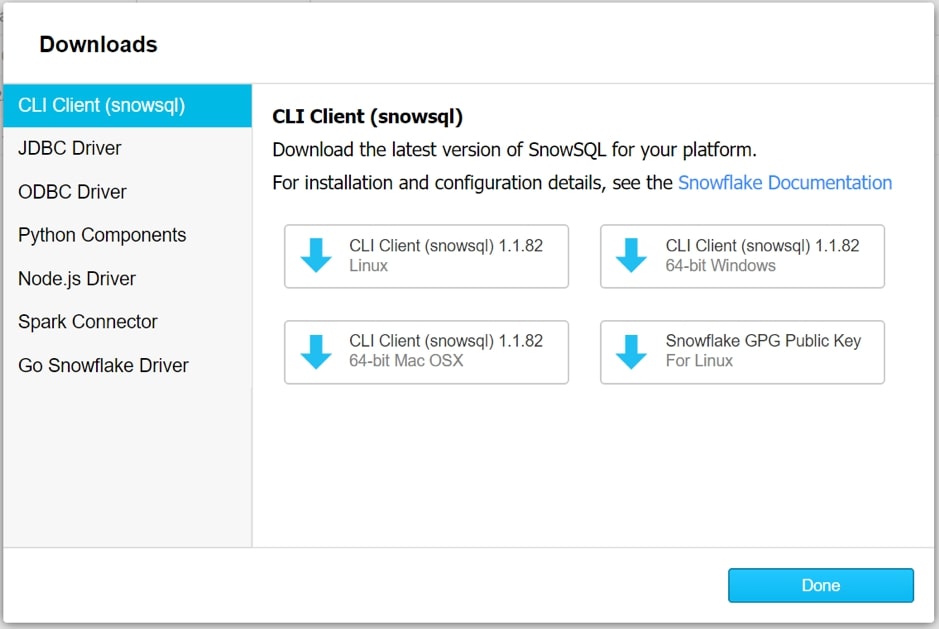

Before we can start using SnowSQL, we must install it. From within the Snowflake UI, the Help button allows users to download supporting tools and drivers:

As our goal is to install the SnowSQL Command Line Interface (CLI) Client, we select this from the list and choose the appropriate operating system:

Once the installer downloads, execute it and run through the installer to install SnowSQL. You may need to enter admin credentials to install the software.

Verifying the Installation of SnowSQL

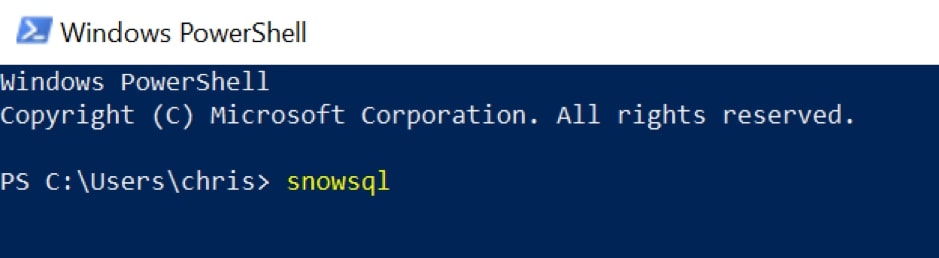

Once SnowSQL is installed, it can be called from within any standard terminal. For this example, we will be using Windows PowerShell; however, we could equally use any other terminal depending on the operating system:

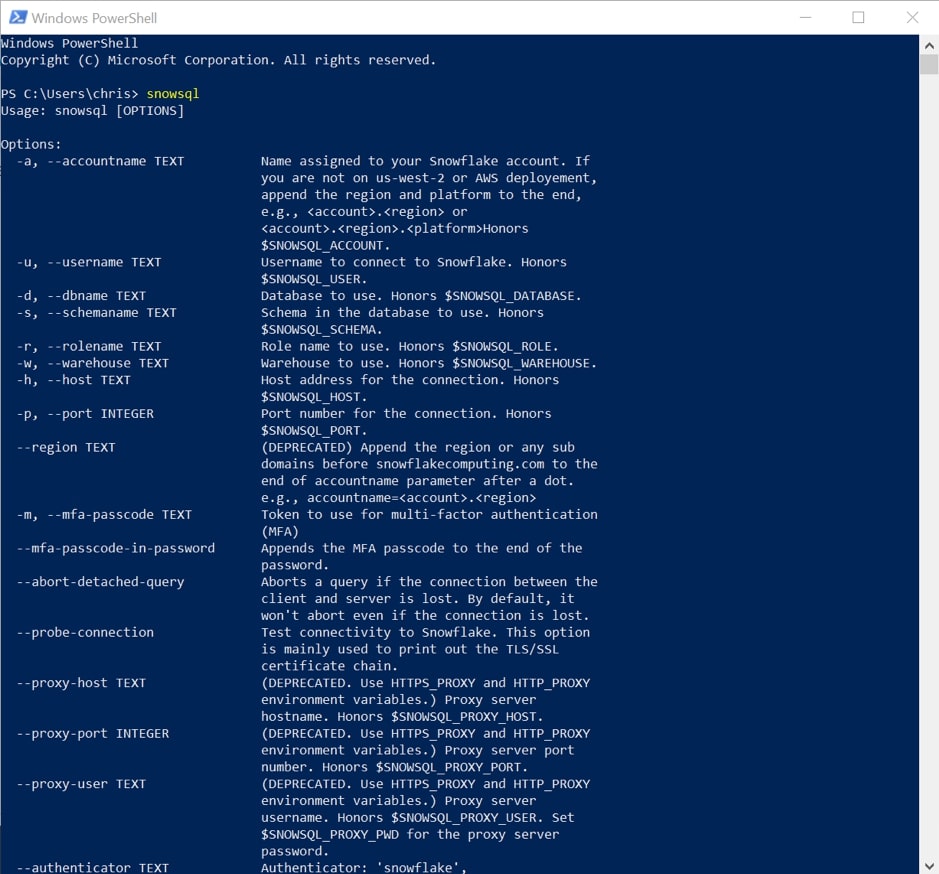

Begin by executing the command snowsql to verify the installation. This will list the various options which can be used within SnowSQL:

Preparing Data for Import

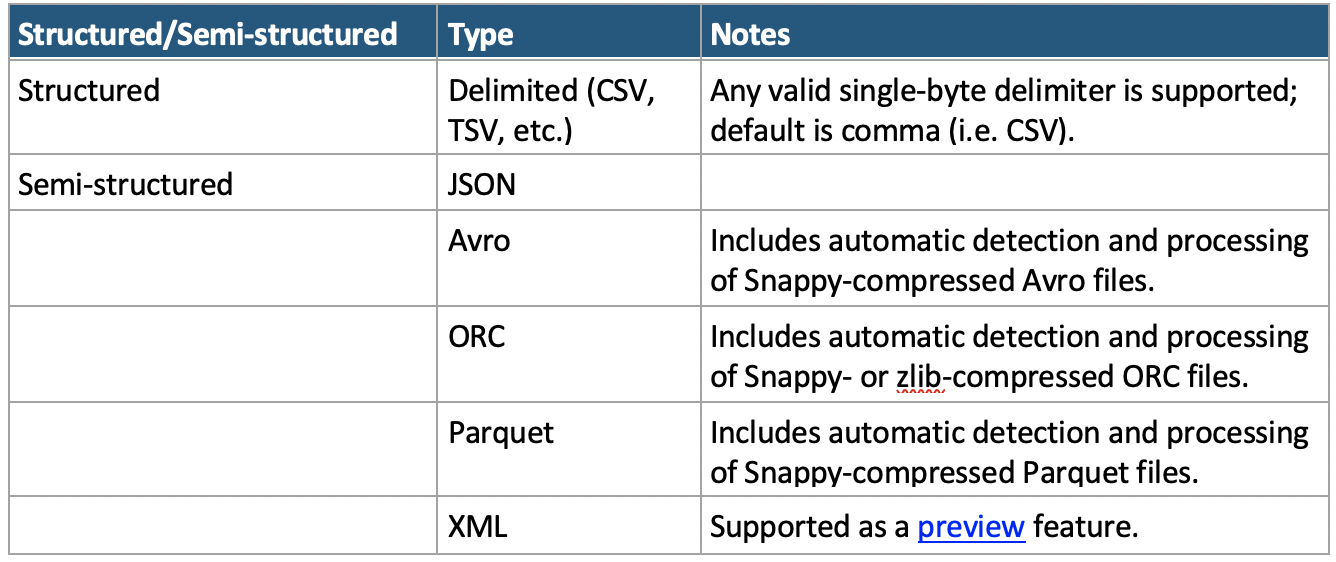

Before we can import any data into Snowflake, it must first be stored in a supported format. At the time of writing, the full list of supported file formats is contained in the table below. An up-to-date list of supported file formats can be found in Snowflake's documentation:

*Note: The XML preview feature link can be accessed here

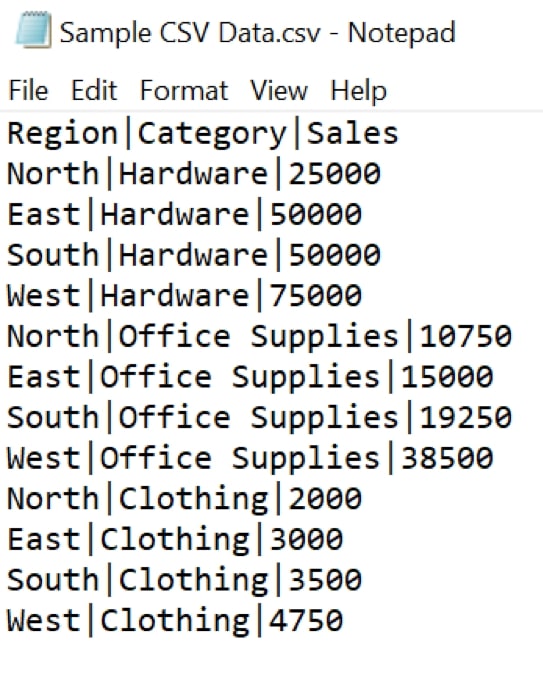

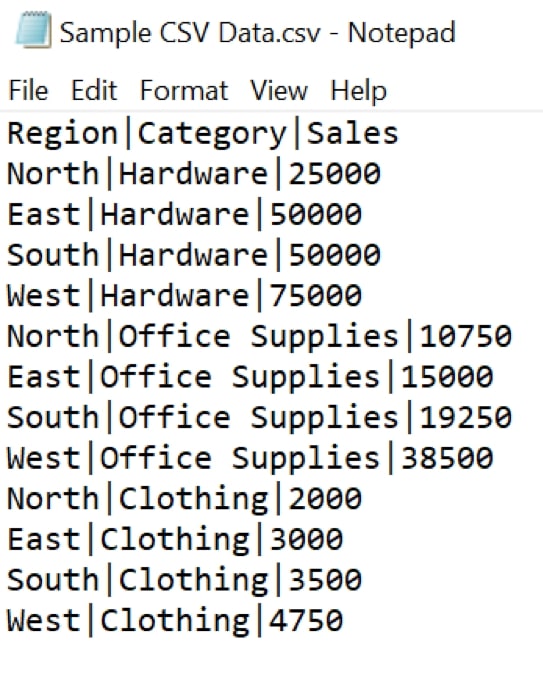

For this example, we will load the following structured data, which is delimited with vertical bars:

Using SnowSQL to Execute Commands

The first step toward utilising SnowSQL is to establish a connection with your Snowflake environment. Three pieces of information are required to establish a connection:

- The Snowflake account to connect to, including the region

- An existing user within that account

- The corresponding password for that user

As Snowflake is a cloud service, each account is accessed via a URL. For example, InterWorks' account in the EU is accessed via https://interworks.eu-central-1.snowflakecomputing.com.

This URL is formed by https://<Account>.<Account_Region>.snowflakecomputing.com.

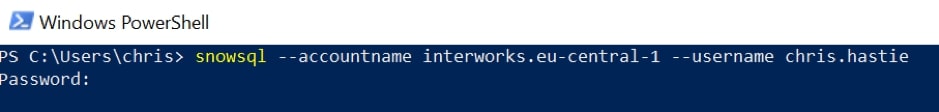

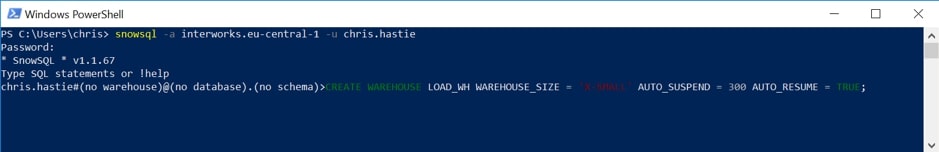

Therefore, we can see that our account is interworks.eu-central-1. Note that we are still using Windows PowerShell; however, this can be achieved in any equivalent terminal. We enter the account using the --accountname option in SnowSQL, along with my user chris.hastie using the --username option:

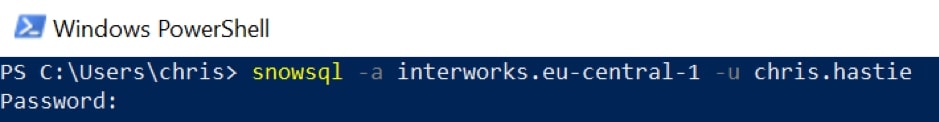

We can also use the shorter names for these options, respectively -a and -u:

In both situations, a password prompt will appear. Enter the password for the desired user. Do not be alarmed if your terminal does not display the password; it will still be reading the input.

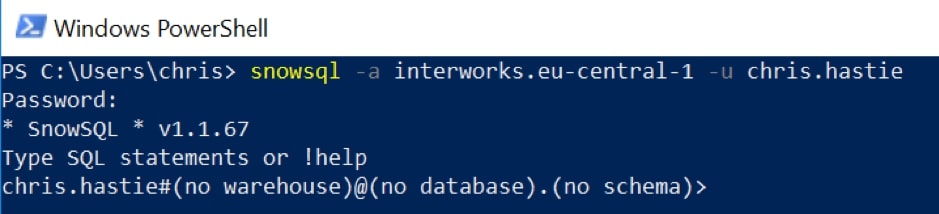

With the connection established, SnowSQL will output the current user along with the current warehouse, database and schema. We have not yet selected any of these, which is why they are all shown as not selected:
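As an optional check, the same session context can be queried directly with Snowflake's context functions. This is a minimal sketch; the column aliases are our own, and the warehouse, database and schema values should come back NULL until each has been selected (unless your user has defaults configured).

```sql
-- Query the session context that SnowSQL displays at its prompt.
-- Values not yet selected in this session are returned as NULL.
SELECT CURRENT_ACCOUNT()   AS account_locator,
       CURRENT_REGION()    AS region_name,
       CURRENT_USER()      AS user_name,
       CURRENT_WAREHOUSE() AS warehouse_name,
       CURRENT_DATABASE()  AS database_name,
       CURRENT_SCHEMA()    AS schema_name;
```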

Preparing a Warehouse

We encourage Snowflake users to test their data loads with various warehouse sizes to find their optimal balance between credit cost and performance. As we are loading a very small file in a bespoke example, we will use an extra-small warehouse called LOAD_WH.

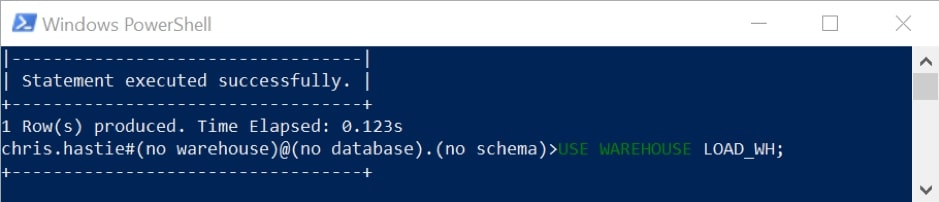

If the warehouse does not exist, it can be created with the CREATE WAREHOUSE command. Note that we have specified the size as extra small, along with setting AUTO_SUSPEND to 300 and AUTO_RESUME to TRUE. This means that our warehouse will automatically suspend itself if it has not been used for five minutes (300 seconds), eliminating costs while the warehouse is unused, and will automatically resume when a new query needs it:
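A minimal sketch of that command, using the LOAD_WH name and the settings described above; the layout and comments are ours rather than a copy of the original screenshot.

```sql
-- Create an extra-small warehouse for the load
CREATE WAREHOUSE LOAD_WH
  WITH WAREHOUSE_SIZE = 'XSMALL'  -- extra small: the lowest credit cost per hour
       AUTO_SUSPEND = 300         -- suspend after 300 seconds (5 minutes) of inactivity
       AUTO_RESUME = TRUE;        -- resume automatically when a query is submitted
```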

Upon successful execution, SnowSQL will output that the warehouse has been created:

Once the warehouse is created, it can be used with the USE WAREHOUSE command:
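The corresponding statement is short; it sets LOAD_WH as the active warehouse for the current SnowSQL session only, so it needs to be repeated in new sessions unless the warehouse is configured as the user's default.

```sql
-- Make LOAD_WH the active warehouse for this session
USE WAREHOUSE LOAD_WH;
```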

We can now see our current warehouse in our connection string directly after the username:

Preparing a Database

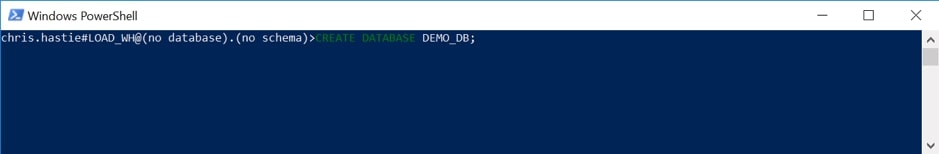

In a similar fashion to warehouses, users can create databases via the CREATE DATABASE command. For this example, we will create a database called DEMO_DB:
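A minimal sketch of the statement. Creating a database in Snowflake also makes it the current database for the session, with the default PUBLIC schema selected.

```sql
-- Create the demo database; it becomes the session's current database
CREATE DATABASE DEMO_DB;
```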

With this database created, our connection will automatically update to use this database. DEMO_DB is now provided in the connection string, along with the default PUBLIC schema:

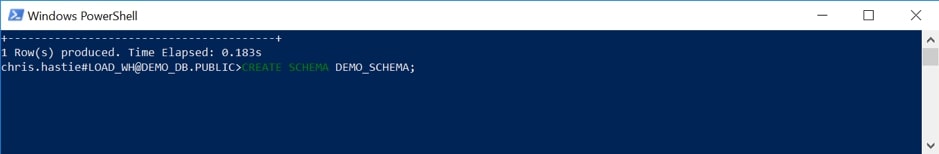

For the sake of uniformity, we will also use the CREATE SCHEMA command to create a new schema called DEMO_SCHEMA:
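A minimal sketch, assuming DEMO_DB is still the current database so that the unqualified schema name is created inside it. As with databases, the newly created schema becomes the current schema for the session.

```sql
-- Create DEMO_SCHEMA inside the current database (DEMO_DB);
-- it becomes the session's current schema
CREATE SCHEMA DEMO_SCHEMA;
```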

Our connection string has now updated to display that we are connected to the DEMO_SCHEMA schema within our DEMO_DB database:

Preparing a Stage

Stages are Snowflake's version of storage buckets. Each stage is a container for storing files and can be either an internal stage within Snowflake or an external stage within Amazon S3 or Azure Blob storage.

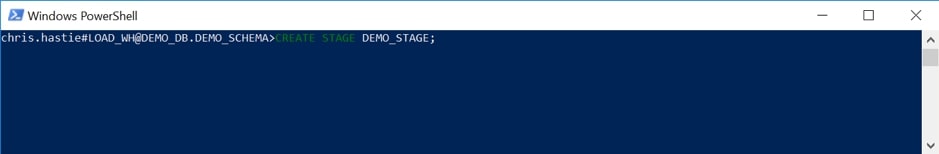

For this example, we will create a simple internal stage within Snowflake using the CREATE STAGE command. Our stage will be called DEMO_STAGE:
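A minimal sketch of the statement. Because no URL is supplied, Snowflake creates a named internal stage in the current schema, DEMO_DB.DEMO_SCHEMA.

```sql
-- Named internal stage: no URL, so files are stored inside Snowflake
CREATE STAGE DEMO_STAGE;
```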

If our data was stored in Amazon S3 or Azure Blob storage, we would require authentication credentials for the storage location along with the URL. These can be entered as part of the CREATE STAGE command, as in the following example:
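A hedged sketch of both external variants. The stage names DEMO_S3_STAGE and DEMO_AZURE_STAGE, the bucket, storage account, container, paths and all credential values are hypothetical placeholders to be replaced with your own.

```sql
-- Hypothetical external stage over an Amazon S3 location
-- (bucket, path and key values are placeholders)
CREATE STAGE DEMO_S3_STAGE
  URL = 's3://my-example-bucket/demo/'
  CREDENTIALS = (AWS_KEY_ID = '<aws_key_id>' AWS_SECRET_KEY = '<aws_secret_key>');

-- Hypothetical external stage over an Azure Blob storage container
-- (storage account, container, path and SAS token are placeholders)
CREATE STAGE DEMO_AZURE_STAGE
  URL = 'azure://myaccount.blob.core.windows.net/mycontainer/demo/'
  CREDENTIALS = (AZURE_SAS_TOKEN = '<sas_token>');
```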

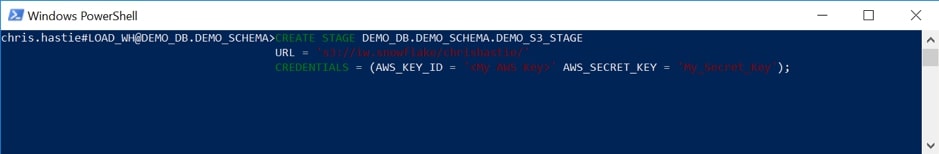

Finally, we can import our data into our stage using the PUT command. More information on this command and other SnowSQL commands can be found in Snowflake's documentation. A key piece of information is that the PUT command can only be executed from outside the main Snowflake interface, which is why we are using SnowSQL instead.

It is important to get the file location string correct, as this may vary depending on the terminal you are using. Note that the stage is identified using an @ sign:
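A sketch of the upload, assuming a hypothetical local file named demo_data.csv saved in C:\data on Windows. The LIST command is an addition that confirms what landed in the stage.

```sql
-- Upload a local file to the internal stage (path and file name are hypothetical).
-- Windows form of the file location:
PUT file://C:\data\demo_data.csv @DEMO_STAGE;
-- On macOS/Linux the equivalent would be: PUT file:///tmp/demo_data.csv @DEMO_STAGE;
-- By default PUT gzip-compresses the file (AUTO_COMPRESS = TRUE),
-- so it is stored in the stage as demo_data.csv.gz.

-- Confirm the file is now in the stage
LIST @DEMO_STAGE;
```

Note that PUT can only be run from a client such as SnowSQL, while LIST also works from a Worksheet.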

After performing the import, SnowSQL will output some key information. This includes the name of the file, its size and the status of the import:

At this stage, our file has now been successfully imported into a secure and encrypted stage in Snowflake.

Preparing the Destination Table

Now that our data is secure in a Snowflake stage, we can think about how we want to view it. In order to load data from a stage into a table, we first must create the table and a file format to match our data.

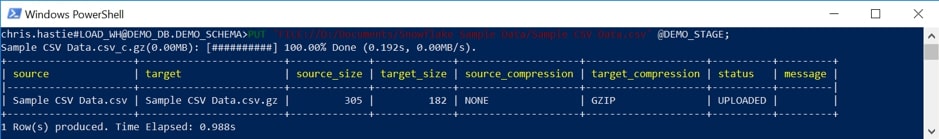

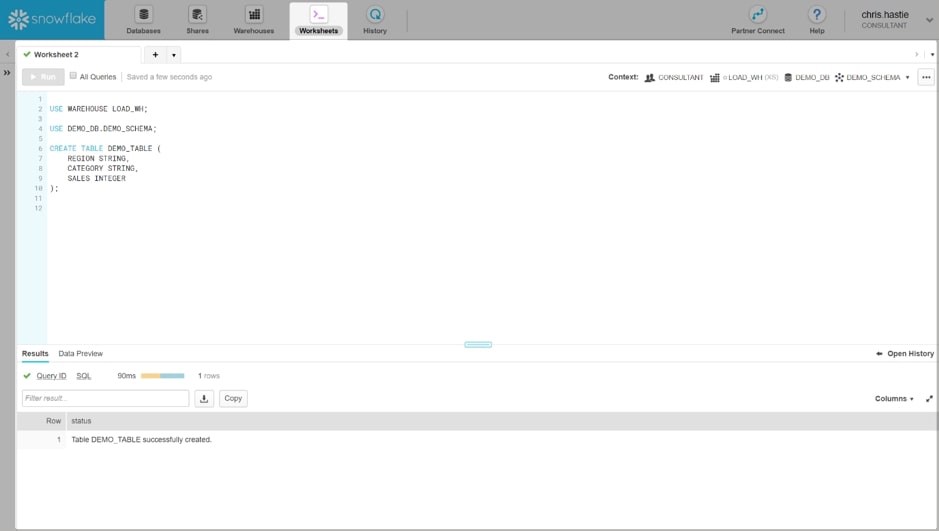

The following steps could still be performed from within the SnowSQL command line interface; however, we will instead perform the remaining steps in Snowflake itself via the Worksheets functionality. We can do this because the remaining commands that we wish to execute can all be performed from within Snowflake, in contrast to the previous PUT command, which could not.

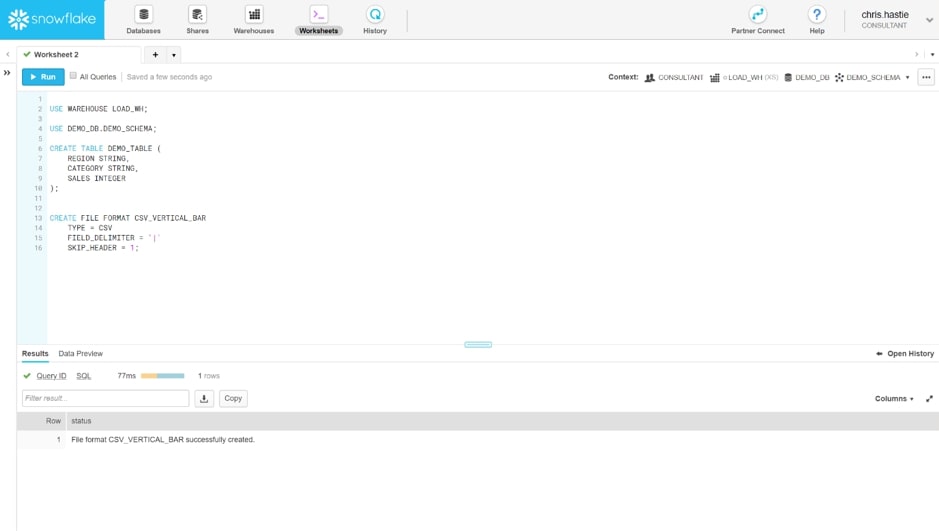

To create our table, we first ensure that we are using the correct warehouse, database and schema. We can then use the CREATE TABLE command to create our table as we would in any SQL-compliant data warehouse:
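The original column definitions are not reproduced in this text, so the sketch below uses a hypothetical table, DEMO_TABLE, with four illustrative columns. Replace them with columns that match your own file, in the same order as the fields in each row.

```sql
-- Set the context for this Worksheet
USE WAREHOUSE LOAD_WH;
USE DATABASE DEMO_DB;
USE SCHEMA DEMO_SCHEMA;

-- Hypothetical destination table; column names and types are illustrative only
CREATE TABLE DEMO_TABLE (
  ID       INTEGER,
  NAME     VARCHAR,
  CATEGORY VARCHAR,
  AMOUNT   NUMBER(10, 2)
);
```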

Preparing the File Format

With our destination table created, the final piece of the puzzle before we load our data is to create a file format. As mentioned earlier, our data is stored as a vertical bar delimited CSV file:

Using the CREATE FILE FORMAT command, we can create a file format called CSV_VERTICAL_BAR. We specify that the file type is CSV, which instructs Snowflake to look for a structured text file with delimited fields. Our field delimiter is a vertical bar, and we skip the first row of data. We do this as the first line of data in our file contains the column names, which we do not want to import into our table in Snowflake:
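A minimal sketch of the file format as described above, created in the current schema (DEMO_DB.DEMO_SCHEMA):

```sql
-- Vertical-bar delimited CSV whose first line holds column names
CREATE FILE FORMAT CSV_VERTICAL_BAR
  TYPE = 'CSV'            -- delimited text file
  FIELD_DELIMITER = '|'   -- fields are separated by vertical bars
  SKIP_HEADER = 1;        -- ignore the header row of column names
```

Compression does not need to be specified here: the CSV default of COMPRESSION = AUTO detects the gzip compression that PUT applies during upload.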

Loading the Data from the Stage into the Table

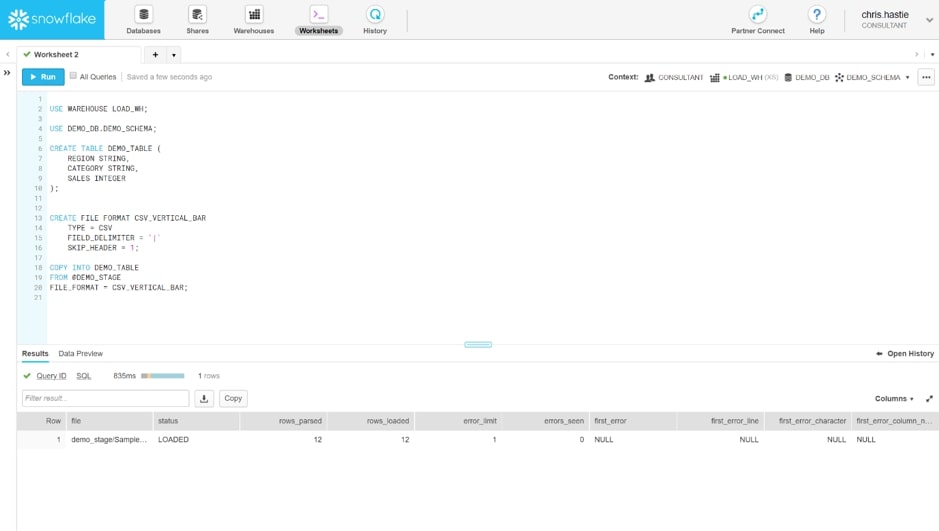

Now that we have all of our pieces lined up, we can use the COPY INTO command to load our data from the stage into the destination table. As with all commands, full information can be found within Snowflake's documentation:
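A sketch of the load, assuming the hypothetical DEMO_TABLE as the destination together with the DEMO_STAGE stage and CSV_VERTICAL_BAR file format named above:

```sql
-- Load every file in the stage into the table using the named file format
COPY INTO DEMO_TABLE
  FROM @DEMO_STAGE
  FILE_FORMAT = (FORMAT_NAME = 'CSV_VERTICAL_BAR');
```

Snowflake keeps load metadata for each table, so running the same COPY INTO again skips files it has already loaded rather than duplicating rows; FORCE = TRUE overrides this if a reload is genuinely wanted.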

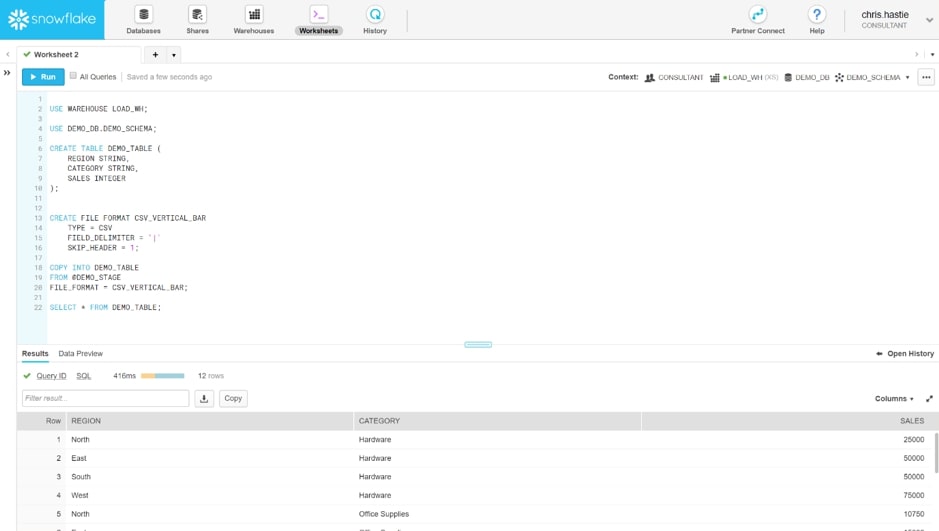

The output informs us that the file loaded successfully, parsing and loading all 12 rows. We can verify this with a standard SELECT command from our table:
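A minimal sketch of that check against the hypothetical DEMO_TABLE; the row count should match the number of rows COPY INTO reported as loaded (12 in this walkthrough).

```sql
-- Inspect the loaded rows
SELECT * FROM DEMO_TABLE;

-- Compare against the row count reported by COPY INTO
SELECT COUNT(*) AS row_count FROM DEMO_TABLE;
```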

Source: https://interworks.com/blog/chastie/2019/12/20/zero-to-snowflake-importing-data-with-code-via-snowsql/
